The AI Boom-Bust

Is the AI boom good or bad for cities and offices? Yes.

It's been one of those weeks. One after the other, product launches and news reports made it clear that the AI boom is alive and well. Things are moving at an unimaginable speed, with consequences that will take decades to unravel.

OpenAI is rolling out new features that allow people to talk with ChatGPT, using their voice, rather than just typing. ChatGPT can now also "see" images, describe what is in them, and refer to them in conversation. It sounds less dramatic in theory than it is in practice. In the few days since the feature was launched, people have already used it to turn hand-drawn sketches into coded websites, to get interior design recommendations for their living rooms, to figure out the location of an image based on its content, to decipher illegible documents, to explain complex diagrams, and to explain inscrutable memes.

ChatGPT can now see, hear, and speak. Rolling out over next two weeks, Plus users will be able to have voice conversations with ChatGPT (iOS & Android) and to include images in conversations (all platforms). https://t.co/uNZjgbR5Bm pic.twitter.com/paG0hMshXb

— OpenAI (@OpenAI) September 25, 2023

ChatGPT is also once again able to access the internet and seek information and is no longer limited to data from before September 2021. As ChatGPT exceeds its digital boundaries, it seeks ways to reach further into the physical world. OpenAI is reportedly in discussions with iPhone designer Jony Ive about a new hardware device that would take ChatGPT's capabilities out of the browser/app —and out of the mobile ecosystems of Apple and Google or the VR ecosystems of Apple and Meta. Softbank's Masayoshi Son, the world's boldest investor, is also rumored to be involved as a potential funder of the OpenAI-Ive collaboration.

While OpenAI is contemplating an AI-powered device, Meta is already launching one. It's a pair of sunglasses. Mark Zuckerberg awkwardly announced a new generation of smart sunglasses in partnership with Ray-Ban. As a first step, users can speak to Meta AI from their sunglasses to retrieve information and seek advice. In a few months, a software update will enable Meta AI to "see" what the user is looking at and provide practical help in real time. Some of the examples Zuckerberg showcased included translating signs in real time, providing instructions on how to fix a leaky faucet, and providing more information about whatever building you're looking at.

Meta’s smart glasses can take calls, play music, and livestream from your face pic.twitter.com/zXDyUxHeW9

— The Verge (@verge) September 27, 2023

Meta also announced a new lineup of celebrity-inspired chatbots that users can interact with. Each chatbot has a unique character and area of expertise, including music, travel, and sports. To spice things up, Meta modeled the look (and perhaps feel) of each chatbot on a related celebrity, including Tom Brady, Paris Hilton, Snoop Dogg, and Charli D'Amelio. It also introduced new ways for users to generate and manipulate images they share.

The most exciting product update from Meta was delivered indirectly. Zuckerberg was a guest on the Lex Fridman Podcast. Instead of a regular video or voice recording, Zuck and Lex met in the... metaverse, meaning they both wore VR headsets and headphones and interacted through digital avatars. The avatars were hyper-realistic, mimicking the looks and even the facial expressions of their "controllers." Watch the first 60 seconds of the following video to understand what it all means.

Here's my conversation with Mark Zuckerberg, his 3rd time on the podcast, but this time we talked in the Metaverse as photorealistic avatars. This was one of the most incredible experiences of my life. It really felt like we were talking in-person, but we were miles apart 🤯 It's… pic.twitter.com/Nu8a3iYWm0

— Lex Fridman (@lexfridman) September 28, 2023

With all these new AI use cases, we'll need plenty more data centers and plenty of energy to keep them up and running. Microsoft is reportedly looking to build its own nuclear reactors for this purpose. The US Nuclear Regulatory Commission recently certified a new type of reactor design, and Microsoft is the first tech giant to (try to) integrate it into its plans:

Microsoft is specifically looking for someone who can roll out a plan for small modular reactors (SMR). All the hype around nuclear these days is around these next-generation reactors. Unlike their older, much larger predecessors, these modular reactors are supposed to be easier and cheaper to build.

While the new design might be cheaper and hopefully safer, it requires new supply and waste management systems. Still, the fact that one of the world's largest companies is exploring nuclear technology is an important signal. I am generally wary of atomic energy, but my impression is that we're already exposed to the risks since the world has plenty of (mostly old) reactors and thousands of nuclear warheads. And since we're already exposed to the downside, we should maximize our exposure to the upside and make more and better use of atomic energy. Other forms of clean and renewable energy are becoming cheaper and more reliable. Still, they will take years to catch up with current energy demand — let alone with the probable explosion of demand that AI and electric cars unleash.

Finally, there was another bit of news this week that had nothing (and everything) to do with AI. Bloomberg reported that OnlyFans' total number of creators has risen 47% to 3.2 million, and users rose 27% to 239 million. As I pointed out earlier:

OnlyFans can be used in a variety of ways, but it is most notable for its use by sexual performers who charge their fans for access to racy content. But OnlyFans is not merely a porn site; instead, users pay for personalized messages from their idols, videos that mention their names, and for having a say on what happens next.

What does this have to do with AI?

The Real Virtual Reality

In a recent interview, technology analysts Ben Thompson and Craig Moffett discussed why people often treat technology, media, and telecommunications (TMT) as a single sector. As Thompson points out, what unifies these three industries is that they all require massive upfront investment — developing software, producing a film, or rolling out cables. But once these costs are sunk, businesses in the TMT sector enjoy "near perfect scalability" and can grow their revenue with "zero marginal costs" — meaning, every new person who watches a movie or uses a phone network contributes more revenue but barely costs anything to serve.

Ben made a profound point about the specific nature of each component of TMT:

Each of these three categories, though, is distinct in the experience they provide:

Media is a recording or publication that enables a shift in time between production and consumption.

Telecoms enables a shift in place when it comes to communication.

Technology, which generally means software, enables interactivity at scale.

Each of these provides a form or an aspect of virtual reality. Recorded media enables you to consume something that was produced earlier, at a different time. Telecommunications allows you to interact with people who are in a different place. And software allows you to interact within a virtual space. In other words, media offers virtual time, telecom offers virtual place, and software provides virtual and personalized interaction — each of them offers an aspect of virtual reality. Still, none of them provides a complete virtual reality. TMT, as we know it, is constrained, particularly by one specific factor. Thompson continues:

The constraint on each of these is the same: human time and attention. Media needs to be created, software needs to be manipulated, and communication depends on there being someone to communicate with. That human constraint, by extension, is perhaps why we don’t actually call media, communication, or software “virtual reality”, despite the defiance of reality I noted above. No matter how profound the changes wrought by digitization, the human component remains.

AI, says Thompson, removes this human constraint. It does so by enabling the convergence of TMT. We can now develop products that allow creative work and software to deliver continuous interactive and personalized experiences. In other words, instead of just watching a movie, talking to a human, or interacting with software, we are now able to (or will soon be able to) do all three at the same time: Interact in a personalized manner with a creatively produced character powered by software. The building blocks of this convergence were all over the news this week — realistic avatars, celebrity-inspired chatbots, and AI models that can see and talk.

The result of this convergence is not just better or more entertaining products but the removal of the human constraint. And without this constraint, products can become more scalable than ever: people can spend more time and money interacting in deeper, more useful, and more meaningful ways with things that were built by (or with the permission of) other humans.

I closed my earlier news roundup with OnlyFans' record growth because adult entertainment has historically been an important, erm, breeding ground for emerging technologies and user behaviors (video streaming, online payments, file-sharing, compression). In this context, it is easy to visualize what Thompson is talking about and the unlimited potential for users to lose themselves inside products that offer unprecedented realism and interactivity.

The distant future I've been writing about over the past three years is arriving sooner than expected. And so are its consequences.

The shape of things to come

New and old celebrities can scale their income by licensing their likeness and personality in new ways. They can deploy a chatbot and realistic avatar that talks one-on-one with multiple fans in parallel. We tend to underestimate the extent to which technology makes all human jobs scalable (see Rise of the 10X Class and The Scalable Imagination).

Humans can also collaborate to create whole new characters who will "work" by providing services to other humans. This sounded farfetched when I wrote about it in 2020, but it is happening on Facebook and Instagram right now.

AI enhances remote work, and remote work enhances AI. Digital communication is becoming richer and more immersive and might soon be more intense and life-like than in-person interaction. Meanwhile, as more work is done online, it becomes easier for AI "agents" to perform more tasks that were previously done by humans. Remote work enables AI to take on more jobs. Stronger AI enables more humans to work remotely. And the flywheel keeps spinning. (See AI and Remote Work: A Match Made in Heaven.)

Imagination is the ultimate constraint on human creation. AI enables anyone with an idea to generate the necessary code and design and distribute their product to billions of people without leaving home or waiting for anyone's help. As I pointed out in Unleash the Crazy Ones:

AI will do to software what Instagram and TikTok did to photos and videos. They will enable every crazy person with an idea to launch their own "machine." What is the software equivalent of Gangnam Style or Donald Trump? I don't know, but we're about to find out.

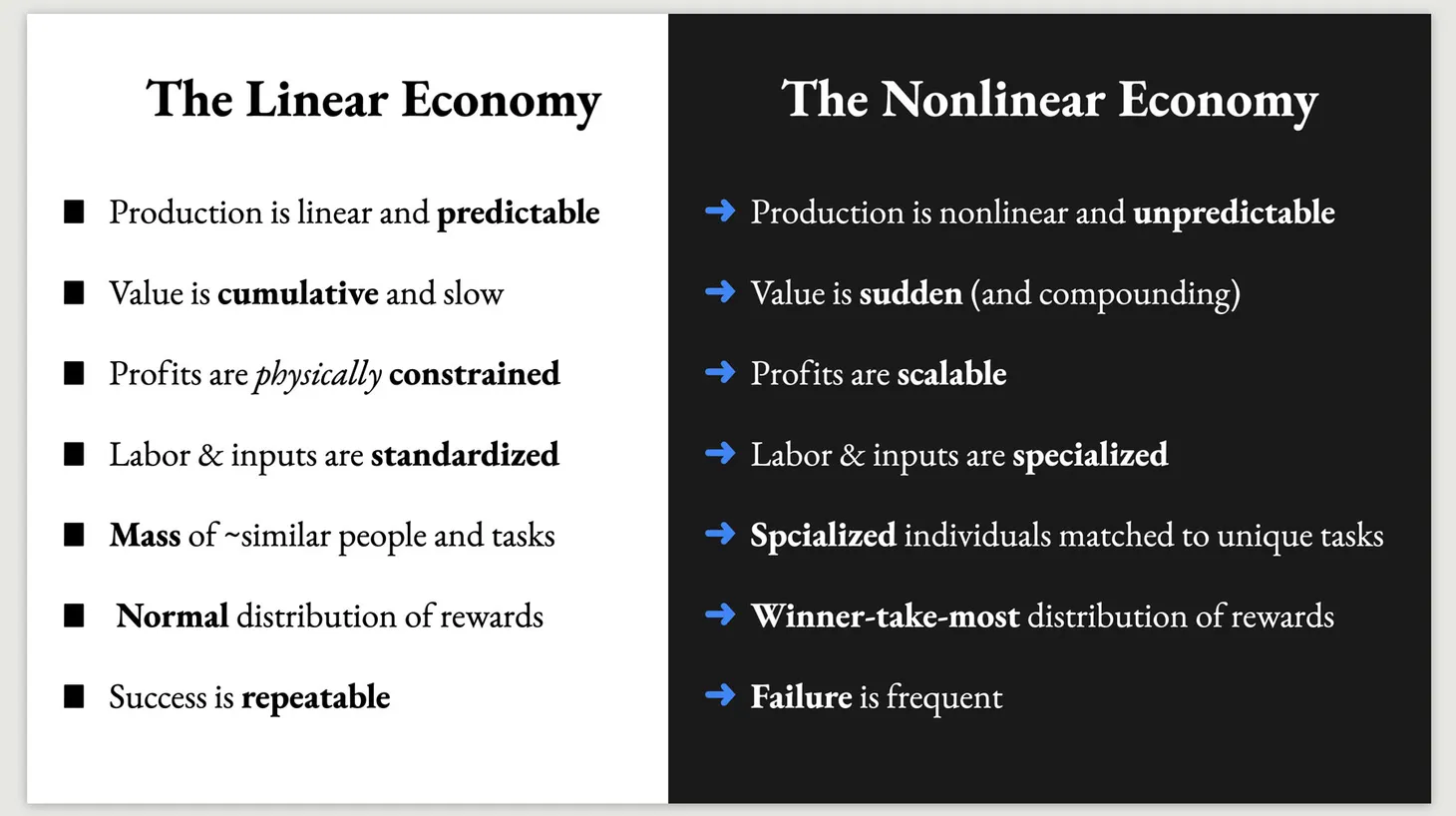

More broadly, the economy is becoming increasingly non-linear. We are surrounded by rapid change, unsure which of our actions matter or what to do to produce the next valuable product or service, and at the mercy of algorithms and social dynamics that crown winners based on pure chance and dethrone them equally fast, for equally opaque reasons. As I pointed out in It's the Company, Stupid:

The real drive for more flexibility comes from companies themselves and, more broadly, from the changing nature of capitalism. Some bosses get it, and some bosses don't, but the simple reality is that it is no longer possible to plan your workforce and office needs too far in advance.

The non-linear nature of work is also behind the blurring boundaries of firms and industries:

Companies like Microsoft and Apple are hiring employees in more locations and relying more heavily on on-demand, contract, and part-time employees. The larger the company and the more competitive the industry, the more likely it is to rely more heavily on flexible and remote employees — in percentage and absolute terms.

Most of these changes happen online, but their impact will be felt in the physical world. What does this mean in practice?

The San Francisco Preview

The media is full of stories about people returning to San Francisco to participate in the AI gold rush. All those remote workers who migrated elsewhere or stayed home are now rushing back to the city center to attend events, collaborate in person, and find their next co-founder or job.

Landlords and corporate bosses are leveraging these anecdotes to fuel a San Francisco comeback story for the ages. Here's Salesforce CEO Marc Benioff on X:

The anecdotes are true. The AI boom is real, and some people are moving (back) to San Francisco. I also have no reason to doubt JLL's estimate of AI companies seeking up to 1 million square feet of office space.

The problem is what happens in the rest of the city. About 30 million square feet of office space in San Francisco is currently vacant. That's 30 times what the AI boom currently requires. And we can expect plenty more space to become vacant as pre-Covid leases come up for renewal over the next 18 months.

More importantly, AI may bring back investor money and tax revenue. But it brings back fewer people. As a dozen tech industry professionals told Reuters:

"unlike past tech booms that have touched San Francisco, the generative AI craze brings fewer jobs, because AI firms excel at staying lean and automating work."

So, is this a boom or bust story? It's both. And it's not restricted to San Francisco. The city on the bay is a microcosm and a preview of the future. We're heading to a world where fewer people generate more and more economic activity. Meanwhile, the environment around them collapses and becomes less safe and less hospitable to a growing share of the population. Flush with cash from the booming tech sector, the government becomes more powerful and less effective.

The AI explosion is welcome and offers great promise. It must be accompanied by an explosion of policy innovations that enable society to adapt and thrive. Most of our institutions (corporations and governments) seem intent on holding onto 20th-century ideas of what is "normal" and "expected."

But the 20th century isn't coming back. San Francisco is already here. It's just not evenly distributed. We can do better.

Best,

Elsewhere on the Internet:

- What will replace Facebook? A few weeks ago, I wrote that "the product with the highest likelihood of killing Twitter is not a Twitter clone but a platform with AI-generated engagement that answers the same needs but 10x better." Following Meta's latest announcement, Casey Newton observed that this is indeed what Meta is trying to build:

Meta plans to place its AI characters on every major surface of its products. They have Facebook pages and Instagram accounts; you will message them in the same inbox that you message your friends and family. Soon, I imagine they will be making Reels.

And when that happens, feeds that were once defined by the connections they enabled between human beings will have become something else: a partially synthetic social network.

- Tokyo is the honey badger of cities — a street-level deep dive into the planning quirks that make Tokyo vibrant, safe, and affordable. Also see my 2017 article, Future of Real Estate? Look to Japan

- New York City is legalizing denser housing near transport stations, reducing parking requirements, and rezoning multiple blocks to allow offices to become housing. 2.5 years ago, I wrote a whitepaper that circulated around NYC government and prescribed all of the above.

- A talk by Bill Gurley about regulatory capture and the potential impact of AI regulation on innovation and consumers.

- Are AI companies hiring poets? The surprising (few) new jobs created by AI. Rhymes with Universal Basic Leisure: Why It Makes Sense to Let People Do Whatever They Want.

- You should read more about parking. Start with this M. Nolan Gray review of Paved Paradise: How Parking Explains the World by Henry Grabar.

- Does Sam Altman know what he's creating? A deep dive from The Atlantic about OpenAI, including interviews with Altman and other key people.

I research technology's impact on how humans live, work, and invest.

Dror Poleg Newsletter
